Category Archives: facebook

Instagram’s privacy updates for kids are positive. But plans for an under-13s app means profits still take precedence

By Tama Leaver, Curtin University

Facebook recently announced significant changes to Instagram for users aged under 16. New accounts will be private by default, and advertisers will be limited in how they can reach young people.

The new changes are long overdue and welcome. But Facebook’s commitment to children’s safety is still in question as it continues to develop a separate version of Instagram for kids aged under 13.

The company received significant backlash after the initial announcement in May. In fact, more than 40 US Attorneys General who usually support big tech banded together to ask Facebook to stop building the under-13s version of Instagram, citing privacy and health concerns.

Privacy and advertising

Online default settings matter. They set expectations for how we should behave online, and many of us will never change them.

Adult accounts on Instagram are public by default. Facebook’s shift to making under-16 accounts private by default means these users will need to actively change their settings if they want a public profile. Existing under-16 users with public accounts will also get a prompt asking if they want to make their account private.

These changes normalise privacy and will encourage young users to focus their interactions more on their circles of friends and followers they approve. Such a change could go a long way in helping young people navigate online privacy.

Facebook has also limited the ways in which advertisers can target Instagram users under age 18 (or older in some countries). Instead of targeting specific users based on their interests gleaned via data collection, advertisers can now only broadly reach young people by focusing ads in terms of age, gender and location.

This change follows recently publicised research that showed Facebook was allowing advertisers to target young users with risky interests — such as smoking, vaping, alcohol, gambling and extreme weight loss — with age-inappropriate ads.

This is particularly worrying, given Facebook’s admission there is “no foolproof way to stop people from misrepresenting their age” when joining Instagram or Facebook. The apps ask for date of birth during sign-up, but have no way of verifying responses. Any child who knows basic arithmetic can work out how to bypass this gateway.

Of course, Facebook’s new changes do not stop Facebook itself from collecting young users’ data. And when an Instagram user becomes a legal adult, all of their data collected up to that point will then likely inform an incredibly detailed profile which will be available to facilitate Facebook’s main business model: extremely targeted advertising.

Deploying Instagram’s top dad

Facebook has been highly strategic in how it released news of its recent changes for young Instagram users. In contrast with Facebook’s chief executive Mark Zuckerberg, Instagram’s head Adam Mosseri has turned his status as a parent into a significant element of his public persona.

Since Mosseri took over after Instagram’s creators left Facebook in 2018, his profile has consistently emphasised he has three young sons, his curated Instagram stories include #dadlife and Lego, and he often signs off Q&A sessions on Instagram by mentioning he needs to spend time with his kids.

When Mosseri posted about the changes for under-16 Instagram users, he carefully framed the news as coming from a parent first, and the head of one of the world’s largest social platforms second. Similar to many influencers, Mosseri knows how to position himself as relatable and authentic.

Age verification and ‘potentially suspicious’ adults

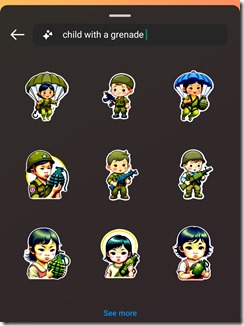

In a paired announcement on July 27, Facebook’s vice-president of youth products Pavni Diwanji announced Facebook and Instagram would be doing more to ensure under-13s could not access the services.

Diwanji said Facebook was using artificial intelligence algorithms to stop “adults that have shown potentially suspicious behavior” from being able to view posts from young people’s accounts, or the accounts themselves. But Facebook has not offered an explanation as to how a user might be found to be “suspicious”.

Diwanji notes the company is “building similar technology to find and remove accounts belonging to people under the age of 13”. But this technology isn’t being used yet.

It’s reasonable to infer Facebook probably won’t actively remove under-13s from either Instagram or Facebook until the new Instagram For Kids app is launched — ensuring those young customers aren’t lost to Facebook altogether.

Despite public backlash, Diwanji’s post confirmed Facebook is indeed still building “a new Instagram experience for tweens”. As I’ve argued in the past, an Instagram for Kids — much like Facebook’s Messenger for Kids before it — would be less about providing a gated playground for children and more about getting children familiar and comfortable with Facebook’s family of apps, in the hope they’ll stay on them for life.

A Facebook spokesperson told The Conversation that a feature introduced in March prevents users registered as adults from sending direct messages to users registered as teens who are not following them.

“This feature relies on our work to predict people’s ages using machine learning technology, and the age people give us when they sign up,” the spokesperson said.

They said “suspicious accounts will no longer see young people in ‘Accounts Suggested for You’, and if they do find their profiles by searching for them directly, they won’t be able to follow them”.

Resources for parents and teens

For parents and teen Instagram users, the recent changes to the platform are a useful prompt to begin or to revisit conversations about privacy and safety on social media.

Instagram does provide some useful resources for parents to help guide these conversations, including a bespoke Australian version of their Parent’s Guide to Instagram created in partnership with ReachOut. There are many other online resources, too, such as CommonSense Media’s Parents’ Ultimate Guide to Instagram.

Regarding Instagram for Kids, a Facebook spokesperson told The Conversation the company hoped to “create something that’s really fun and educational, with family friendly safety features”.

But the fact that this app is still planned means Facebook can’t accept the most straightforward way of keeping young children safe: keeping them off Facebook and Instagram altogether.

Tama Leaver, Professor of Internet Studies, Curtin University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Happy birthday Instagram! 5 ways doing it for the ‘gram has changed us

Tama Leaver, Curtin University; Crystal Abidin, Curtin University, and Tim Highfield, University of Sheffield

6 October 2020 marks Instagram’s tenth birthday. Having amassed more than a billion active users worldwide, the app has changed radically in that decade. And it has changed us.

1. Instagram’s evolution

When it was launched on October 6, 2010 by Kevin Systrom and Mike Krieger, Instagram was an iPhone-only app. The user could take photos (and only take photos — the app could not load existing images from the phone’s gallery) within a square frame. These could be shared, with an enhancing filter if desired. Other users could comment or like the images. That was it.

As we chronicle in our book, the platform has grown rapidly and been at the forefront of an increasingly visual social media landscape.

In 2012, Facebook purchased Instagram in a deal worth US$1 billion (A$1.4 billion), which in retrospect probably seems cheap. Instagram is now one of the most profitable jewels in the Facebook crown.

Instagram has integrated new features over time, but it did not invent all of them.

Instagram Stories, with more than half a billion daily users, was shamelessly borrowed from Snapchat in 2016. It allowed users to post 10-second content bites which disappear after 24 hours. The rivers of casual and intimate content (later integrated into Facebook) are widely considered to have revitalised the app.

Similarly, IGTV is Instagram’s answer to YouTube’s longer-form video. And if the recently-released Reels isn’t a TikTok clone, we’re not sure what else it could be.

2. Under the influencers

Instagram is largely responsible for the rapid professionalisation of the influencer industry. Insiders estimated the influencer industry would grow to US$9.7 billion (A$13.5 billion) in 2020, though COVID-19 has since taken a toll on this as with other sectors.

As early as 2011, professional lifestyle bloggers throughout Southeast Asia were moving to Instagram, turning it into a brimming marketplace. They sold ad space via post captions and monetised selfies through sponsored products. Such vernacular commerce pre-dates Instagram’s Paid Partnership feature, which launched in late 2017.

The use of images as a primary mode of communication, as opposed to the text-based modes of the blogging era, facilitated an explosion of aspiring influencers. The threshold for turning oneself into an online brand was dramatically lowered.

Instagrammers relied more on photography and their looks — enhanced by filters and editing built into the platform.

Soon, the “extremely professional and polished, the pretty, pristine, and picturesque” started to become boring. Finstagrams (“fake Instagram”) and secondary accounts proliferated and allowed influencers to display behind-the-scenes snippets and authenticity through calculated performances of amateurism.

3. Instabusiness as usual

As influencers commercialised Instagram captions and photos, those who had owned online shops turned hashtag streams into advertorial campaigns. They relied on the labour of followers to publicise their wares and amplify their reach.

Bigger businesses followed suit and so did advice from marketing experts for how best to “optimise” engagement.

In mid-2016, Instagram belatedly launched business accounts and tools, allowing companies easy access to back-end analytics. The introduction of the “swipeable carousel” of story content in early 2017 further expanded commercial opportunities for businesses by multiplying ad space per Instagram post. This year, in the tradition of Instagram corporatising user innovations, it announced Instagram Shops would allow businesses to sell products directly via a digital storefront. Users had previously done this via links.

4. Sharenting

Instagram isn’t just where we tell the visual story of ourselves, but also where we co-create each other’s stories. Nowhere is this more evident than the way parents “sharent”, posting their children’s daily lives and milestones.

Many children’s Instagram presence begins before they are even born. Sharing ultrasound photos has become a standard way to announce a pregnancy. Over 1.5 million public Instagram posts are tagged #genderreveal.

Sharenting raises privacy questions: who owns a child’s image? Can children withdraw publishing permission later?

Sharenting entails handing over children’s data to Facebook as part of the larger realm of surveillance capitalism. A saying that emerged around the same time as Instagram was born still rings true: “When something online is free, you’re not the customer, you’re the product”. We pay for Instagram’s “free” platform with our user data and our children’s data, too, when we share photos of them.

5. Seeing through the frame

The apparent “Instagrammability” of a meal, a place, or an experience has seen the rise of numerous visual trends and tropes.

Short-lived Instagram Stories and disappearing Direct Messages add more spaces to express more things without the threat of permanence.

The events of 2020 have shown our ways of seeing on Instagram reveal the possibilities and pitfalls of social media.

In June, racial justice activism on #BlackoutTuesday, while extremely popular, also had the effect of swamping the #BlackLivesMatter hashtag with black squares.

Instagram is rife with disinformation and conspiracy theories which hijack the look and feel of authoritative content. The template of popular Instagram content can see familiar aesthetics weaponised to spread misinformation.

Ultimately, the last decade has seen Instagram become one of the main lenses through which we see the world, personally and politically. Users communicate and frame the lives they share with family, friends and the wider world.

Tama Leaver, Associate Professor in Internet Studies, Curtin University; Crystal Abidin, Senior Research Fellow & ARC DECRA, Internet Studies, Curtin University, and Tim Highfield, Lecturer in Digital Media and Society, University of Sheffield

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Facebook hoping Messenger Kids will draw future users, and their data

Facebook has always had a problem with kids.

The US Children’s Online Privacy Protection Act (COPPA) explicitly forbids the collection of data from children under 13 without parental consent.

Rather than go through the complicated verification processes that involve getting parental consent, Facebook, like most online platforms, has previously stated that children under 13 simply cannot have Facebook accounts.

Of course, that has been one of the biggest white lies of the internet, along with clicking the little button which says you’ve read the Terms of Use; many, many kids have had Facebook accounts, or Instagram accounts (another platform wholly owned by Facebook), simply by lying about their birth date, which neither Facebook nor Instagram seeks to verify if users indicate they’re 13 or older.

Many children have utilised some or all of Facebook’s features using their parent’s or older sibling’s accounts as well. Facebook’s internal messaging functions, and the standalone Messenger app, have at times been shared by the named adult account holder and one or more of their children.

Sometimes this will involve parent accounts connecting to each other simply so kids can Video Chat, somewhat messing up Facebook’s precious map of connections.

Enter Messenger Kids, Facebook’s new Messenger app explicitly for the under-13s. Messenger Kids is promoted as having all the fun bits, but in a more careful and controlled space directed by parental consent and safety concerns.

To use Messenger Kids, a parent or caregiver uses their own Facebook account to authorise Messenger Kids for their child. That adult then gets a new control panel in Facebook where they can approve (or not) any and all connections that child has.

Kids can video chat, message, access a pre-filtered set of animated GIFs and images, and interact in other playful ways.

PHOTO: The app has controls built into its functionality that allow parents to approve contacts. (Supplied: Facebook)

In the press release introducing Messenger Kids, Facebook emphasises that this product was designed after careful research, with a view to giving parents more control, and giving kids a safe space to interact while providing them with room to grow as budding digital creators. Which is likely all true, but only tells part of the story.

As with all of Facebook’s changes and releases, it’s vitally important to ask: what’s in it for Facebook?

While Messenger Kids won’t show ads (to start with), it builds a level of familiarity and trust in Facebook itself. If Messenger Kids allows Facebook to become a space of humour and friendship for years before a “real” Facebook account is allowed, the odds of a child signing up once they’re eligible become much greater.

Facebook playing the long game

In an era when teens are showing less and less interest in Facebook’s main platform, Messenger Kids is part of a clear and deliberate strategy to recapture their interest. It won’t happen overnight, but Facebook’s playing the long game here.

If Messenger Kids replaces other video messaging services, then it’s also true that any person kids are talking to will need to have an active Facebook account, whether that’s mum and dad, older cousins or even great grandparents. That’s a clever way to keep a whole range of people actively using Facebook (and actively seeing the ads which make Facebook money).

PHOTO: Mark Zuckerberg and wife Priscilla Chan read ‘Quantum Physics for Babies’ to their son Max. (Facebook: Mark Zuckerberg)

Facebook wants data about you. It wants data about your networks, connections and interactions. It wants data about your kids. And it wants data about their networks, connections and interactions, too.

When they set up Messenger Kids, parents have to provide their child’s real name. While this is consistent with Facebook’s real names policy, the flexibility to use pseudonyms or other identifiers for kids would demonstrate real commitment to carving out Messenger Kids as something and somewhere different. That’s not the path Facebook has taken.

Facebook might not use this data to sell ads to your kids today, but adding kids into the mix will help Facebook refine its maps of what you do (and stop kids using their parents’ accounts for Video Chat messing up that data). It will also mean Facebook understands much better who has kids, how old they are, who they’re connected to, and so on.

One more rich source of data (kids) adds more depth to the data that makes Facebook tick. And makes Facebook profit. Lots of profit.

Facebook’s main app, Messenger, Instagram, and WhatsApp (all owned by Facebook) are all free to use because the data generated by users is enough to make Facebook money. Messenger Kids isn’t philanthropy; it’s the same business model, just on a longer scale.

Facebook isn’t alone in exploring variations of their apps for children.

Google, Amazon and Apple want your kids

As far back as 2013 Snapchat released SnapKidz, which basically had all the creative elements of Snapchat, but not the sharing ones. However, their kids-specific app was quietly shelved the following year, probably for lack of any sort of business model.

PHOTO: The space created by Messenger Kids won’t stop cyberbullying. (ABC News: Will Ockenden)

Since early 2017, Google has also shifted to allowing kids to establish an account managed by their parents. It’s not hard to imagine why, when many children now chat with Google daily using the Google Home speakers (which, really, should be called “listeners” first and foremost).

Google Home, Amazon’s Echo and Apple’s soon-to-be-released HomePod all but remove the textual and tactile barriers which once prevented kids interacting directly with these online giants.

A child’s Google Account also allows parents to give them access to YouTube Kids. That said, the content that’s permissible on YouTube Kids has been the subject of a lot of attention recently.

In short, if dark parodies of Peppa Pig where Peppa has her teeth painfully removed to the sounds of screaming are going to upset your kids, it’s not safe to leave them alone to navigate YouTube Kids.

Nor will the space created by Messenger Kids stop cyberbullying; it might not be anonymous, but parents will only know there’s a problem if they consistently talk to their children about their online interactions.

Facebook often proves unable to regulate content effectively, in large part because it relies on algorithms and a relatively small team of people to very rapidly decide what does and doesn’t violate Facebook’s already fuzzy guidelines about acceptability. It’s unclear how Messenger Kids content will be policed, but the standard Facebook approach doesn’t seem sufficient.

At the moment, Messenger Kids is only available in the US; before it inevitably arrives in Australia and elsewhere, parents and caregivers need to decide whether they’re comfortable exchanging some of their children’s data for the functionality that the new app provides.

And, to be fair, Messenger Kids may well be very useful; a comparatively safe space where kids can talk to each other, explore tools of digital creativity, and increase their online literacies, certainly has its place.

Most important, though, is this simple reminder: Messenger Kids isn’t (just) screen time, it’s social time. And as with most new social situations, from playgrounds to pools, parent and caregiver supervision helps young people understand, navigate and make the most of those situations. The inverse is true, too: a lack of discussion about new spaces and situations will mean that the chances of kids getting into awkward, difficult, or even dangerous situations go up exponentially.

Messenger Kids isn’t just making Facebook feel normal, familiar and safe for kids. It’s part of Facebook’s long game in terms of staying relevant, while all of Facebook’s existing issues remain.

Tama Leaver is an Associate Professor in the Department of Internet Studies at Curtin University in Perth, Western Australia.

[This piece was originally published on the ABC News website.]

Strategies for Developing a Scholarly Web Presence During a Higher Degree

As part of the Curtin Humanities Research Skills and Careers Workshops 2015 I recently facilitated a workshop entitled Strategies for Developing a Scholarly Web Presence During a Higher Degree. As the workshop received a very positive response and addressed a number of strategies and issues that participants had not addressed previously, I thought I’d share the slides here in case they’re of use to others.

For more context regarding scholarly use of social media in particular, it’s worth checking out Deborah Lupton’s 2014 report ‘Feeling Better Connected’: Academics’ Use of Social Media.

Facebook in Education: Special Issue of Digital Culture & Education

I’m pleased to announce that the special themed issue of Digital Culture and Education on Facebook in Education, edited by Mike Kent and me, has been released. The issue features an introductory article by Mike and me, ‘Facebook in Education: Lessons Learnt’, in which we may have some opinions about whether the hype around MOOCs and disruptive online education ignores the very long history of learning online (hint: it does). As something of a corrective to that hype, this issue explores different aspects of the complicated relationship between Facebook as a platform and learning and teaching in higher education.

This issue features ‘“Face to face” Learning from others in Facebook Groups’ by Eleanor Sandry, ‘Exploiting fluencies: Educational expropriation of social networking site consumer training’ by Lucinda Rush and D.E. Wittkower, ‘Learning or Liking: Educational architecture and the efficacy of attention’ by Leanne McRae, and ‘Separating Work and Play: Privacy, Anonymity and the Politics of Interactive Pedagogy in Deploying Facebook in Learning and Teaching’ by Rob Cover.

Also, watch this space in about a month for a related and slightly larger work in the same area.

Is Facebook finally taking anonymity seriously?

By Tama Leaver, Curtin University and Emily van der Nagel, Swinburne University of Technology

Having some form of anonymity online offers many people a kind of freedom. Whether it’s used for exposing corruption or just experimenting socially online, it provides a way for the content (but not its author) to be seen.

But this freedom can also easily be abused by those who use anonymity to troll, abuse or harass others, which is why Facebook has previously been opposed to “anonymity on the internet”.

So in announcing that it will allow users to log in to apps anonymously, is Facebook taking anonymity seriously?

Real identities on Facebook

CEO Mark Zuckerberg has been committed to Facebook as a site for users to have a single real identity since its beginning a decade ago as a platform to connect college students. Today, Facebook’s core business is still about connecting people with those they already know.

But there have been concerns about what personal information is revealed when people use any third-party apps on Facebook.

So this latest announcement aims to address any reluctance some users may have to sign in to third-party apps. Users will soon be able to log in to them without revealing any of their wealth of personal information.

That does not mean they will be anonymous to Facebook – the social media site will still track user activity.

It might seem like the beginning of a shift away from singular, fixed identities, but tweaking privacy settings hardly indicates that Facebook is embracing anonymity. It’s a long way from changing how third-party apps are approached to changing Facebook’s entire real-name culture.

Facebook still insists that “users provide their real names and information”, which it describes as an ongoing “commitment” users make to the platform.

Changing the Facebook experience?

Having the option to log in to third-party apps anonymously does not necessarily mean Facebook users will actually use it. Effective use of Facebook’s privacy settings depends on user knowledge and motivation, and not all users opt in.

A recent Pew Research Center report reveals that the most common strategy people use to be less visible online is to clear their cookies and browser history.

Only 14% of those interviewed said they had used a service to browse the internet anonymously. So, for most Facebook users, their experience won’t change.

Facebook login on other apps and websites

Facebook offers users the ability to use their authenticated Facebook identity to log in to third-party web services and mobile apps. At its simplest and most appealing level, this alleviates the need for users to fill in all their details when signing up for a new app. Instead they can just click the “Log in with Facebook” button.

For online corporations whose businesses depend on building detailed user profiles to attract advertisers, authentication is a real boon. It means they know exactly what apps people are using and when they log in to them.

Automated data flows can often push information back into the authenticating service (such as the music someone is playing on Spotify turning up in their Facebook newsfeed).

While having one account to log in to a range of apps and services is certainly handy, this convenience means it’s almost impossible to tell what information is being shared.

Is Facebook just sharing your email address and full name, or is it providing your date of birth, most recent location, hometown, a full list of friends and so forth? Understandably, this again raises privacy concerns for many people.

How anonymous login works

To address these concerns, Facebook is testing anonymous login as well as a more granular approach to authentication. (It’s worth noting that neither of these changes has been made available to users yet.)

Given the long history of privacy missteps by Facebook, the new login appears to be a step forward. Users will be told what information an app is requesting, and have the option of selectively deciding which of those items Facebook should actually provide.

Facebook will also ask users whether they want to allow the app to post information to Facebook on their behalf. Significantly, this now places the onus on users to manage the way Facebook shares their information on their behalf.

In describing anonymous login, Facebook explains that:

“Sometimes people want to try out apps, but they’re not ready to share any information about themselves.”

It’s certainly useful to try out apps without having to fill in and establish a full profile, but very few apps can actually operate without some sort of persistent user identity.

The implication is once a user has tested an app, to use its full functionality they’ll have to set up a profile, probably by allowing Facebook to share some of their data with the app or service.

Taking on the competition

The values of identity and anonymity are both central to the current social media war to gain user attention and loyalty.

Facebook’s anonymous login might cynically be seen as an attempt to court users who have flocked to Snapchat, an app which has anonymity built into its design from the outset.

Snapchat’s creators famously turned down a US$3 billion buyout bid from Facebook. Last week it also revealed part of its competitive plan, an updated version of Snapchat that offers seamless real-time video and text chat.

By default, these conversations disappear as soon as they’ve happened, but users can select important items to hold on to.

Whether competing with Snapchat, or any number of other social media services, Facebook will have to continue to consider the way identity and anonymity are valued by users. At the moment its flirting with anonymity is tokenistic at best.

Tama Leaver receives funding from the Australian Research Council (ARC).

Emily van der Nagel does not work for, consult to, own shares in or receive funding from any company or organisation that would benefit from this article, and has no relevant affiliations.

This article was originally published on The Conversation. Read the original article.

Olympic Trolls: Mainstream Memes and Digital Discord?

The themed issue of the Fibreculture journal on Trolls and the negative space of the internet has been released, with a raft of amazing articles, including my piece ‘Olympic Trolls: Mainstream Memes and Digital Discord?’ looking at the mainstreaming of some techniques associated with trolling, using a case study of a Facebook group lamenting the quality of Channel 9’s coverage of the 2012 Olympics.

The abstract:

While the mainstream press have often used the accusation of trolling to cover almost any form of online abuse, the term itself has a long and changing history. In scholarly work, trolling has morphed from a description of newsgroup and discussion board commentators who appeared genuine but were actually just provocateurs, through to contemporary analyses which focus on the anonymity, memes and abusive comments most clearly represented by users of the iconic online image board 4chan, and, at times, the related Anonymous political movement. To explore more mainstream examples of what might appear to be trolling at first glance, this paper analyses the Channel Nine Fail (Ch9Fail) Facebook group which formed in protest against the quality of the publicly broadcast Olympic Games coverage in Australia in 2012. While utilising many tools of trolling, such as the use of memes, deliberately provocative humour and language, targeting celebrities, and attempting to provoke media attention, this paper argues that the Ch9Fail group actually demonstrates the increasingly mainstream nature of many online communication strategies once associated with trolls. The mainstreaming of certain activities which have typified trolling highlights these techniques as part of a more banal everyday digital discourse; despite mainstream media presenting trolls as extremist provocateurs, many who partake in trolling techniques are simply ordinary citizens expressing themselves online.

The full paper is freely available online, and as a PDF.

Birth, Death and Facebook

Last week, as the inaugural paper in CCAT’s new seminar series Adventures in Culture and Technology (ACAT), I presented a more in-depth, although still in-progress, talk based on a paper I’m finishing on Facebook and the questions of birth and death. Here are the slides along with recorded audio if you’re interested:

The talk abstract: While social media services, including the behemoth Facebook with over a billion users, promote and encourage the ongoing creation, maintenance and performance of an active online self, complete with agency, every act of communication is also recorded. Indeed, the recordings made by other people about ourselves can reveal more than we actively and consciously choose to reveal about ourselves. The way people influence the identity and legacy of others is particularly pronounced when we consider birth – how parents and others ‘create’ an individual online before that young person has any identity in their online identity construction – and at death, when a person ceases to have agency altogether and becomes exclusively a recorded and encoded data construct. This seminar explores the limits and implications for agency, identity and data personhood in the age of Facebook.